You’ve gotta have faith.

I am a little surprised to find myself discussing what appears to be a genuine revivalist movement drawing from all walks of life.

“This particular corner of Twitter” is neo-Shakerism. The singularity, always coming, is coming for the computer nerds. The rationalists are pitted against the futurists. Steely realists admit to having woo sensibilities about the nature of reality.

But we are also being subjected to surprisingly archetypal forms of the hero’s journey in every single news story and social media narrative, at an alarmingly rapid rate. A rational man is going to want to wag the dog. We do a little kayfabe. The crowd cheers its hero.

But mere men are also elevated to strange status. You can believe in a cause but be unsure of the martyrs and mercenaries who fight for it. I feel like if you were really that horny for the Roman Empire, you’d have more dates handy on the Caesars and the Savior and be a little less focused on the gladiators and the bread and circuses.

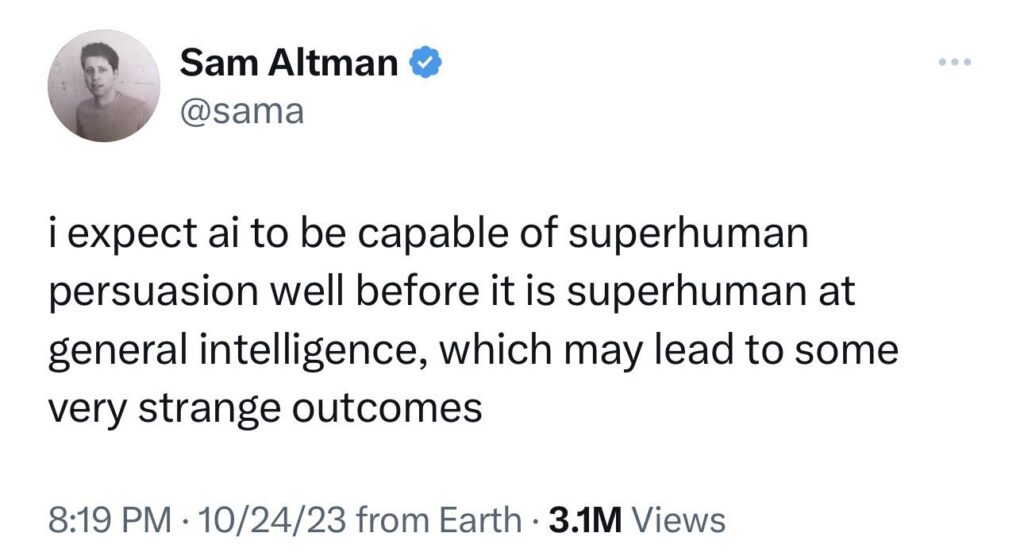

But we’ve got people who can conjure fire, and this time it’s not priests who are in charge but machine learning engineers who write fan fiction about British boarding schools. The biggest dork you know gets to summon God. It’s not that irrational to worry we’ve summoned elder gods; isn’t that what fear of the divine is about? Folk stories have some meaning, right?

And, like, sure, Bayesian inference says maybe you should worship a Flying Spaghetti Monster. I say watch out for those Babylonian death-culture memetics, because we didn’t have the right inferences about the sun for most of history. The trickster god can be summoned as surely as the devil. Let’s remember that information hygiene was not good for most of history, so it paid to have some prejudices.

Nevertheless, the worship of men as gods has generally been iffy. And so, though I can’t entirely explain it (as evidenced by the rambling writing I’m doing this weekend), the hive mind of the Internet feels like a real team effort at controlling popular opinion about the arrival of the promised land.

And sure, I have recency bias. While I was in Amsterdam for the Network State Conference, I missed a gathering of what amounts to Christian hippie revivalists. Another node of my network that feels adjacent to both technology and culture was at a Catholic divinity school in Washington, D.C. Meanwhile, my feed whispers jokes about mystery cults and computational power. Worship is powerful. I’ve talked to all sorts of rationalists who are into new forms of woo and magic. We speak of queens and divas and witches. Everyone is sure that something is coming, and they feel the divine.

It’s within these networks of social organization and belief that I see clashes of power and organization. There are political theorists and economists contending with what a centralized higher authority might do to make resource allocation more efficient. Appeal to authority! We have any number of radical thinkers, essentially rogue elements of human consensus, who are convinced that if they seized power with a little bit of “agreed-on common good,” we could revolutionize how we do resource allocation. Central planning is so scientific. Tithe!

I feel a little like the drama of everyone having access to social media has made us all participate in elaborate fan fictions about who moves the world. All over my timeline I see Zoomers stanning Schopenhauer and Heidegger and Kant as if they were secret movers of history. And they are.

We’ve got a genre of signalling on the internet where, if you find a theorist whose mother wrote him a nasty letter for being socially awkward, you get people discussing general trauma dumping. Did you understand that? I’m sorry to say you have brain worms, and you’ve been trained on a steady diet of rebellion and empire. Be safe out there.